“`html

The Toughest Challenges to Overcome with Artificial Intelligence Are Here — And They’re Shaping the Next Wave of Tech

Estimated reading time: 25 minutes

Key Takeaways

- Data quality and bias: Poor or biased data leads to unfair and harmful AI outcomes (source).

- Ethical and privacy concerns: Fair, accountable use and strong privacy protections under laws like GDPR and CCPA are essential (source; source).

- Explainability: Models must be understandable, especially in sensitive fields (source).

- Security: Defending AI data, models, and pipelines from adversarial attacks is critical (source; source).

- Infrastructure costs: Building and running cutting-edge AI models is expensive and resource-heavy (source).

- System integration: Legacy systems often hinder AI adoption due to complexity and incompatibility (source).

- Talent gap: There is a shortage of skilled AI professionals slowing progress (source).

- Scalability: Maintaining speed, reliability, and cost-effectiveness at scale is challenging (source).

- Regulatory compliance: Complex and evolving rules require vigilance and documentation (source; source).

- Rapid technological change: Constant evolution requires ongoing learning and adaptability (source; source; source; source).

Table of Contents

- The Toughest Challenges to Overcome with Artificial Intelligence Are Here — And They’re Shaping the Next Wave of Tech

- Key Takeaways

- The 10 Biggest AI Challenges

- Additional Sector-Specific and Emerging Challenges

- A Quick Reference: Key AI Challenges Summarized

- How These Challenges Connect

- What It Takes to Move Forward

- A Closer Look: Stories Behind the Stats

- Sector Spotlight: Healthcare’s “Hard Mode”

- Why This Moment Feels So Big

- Checklist for Leaders Getting Started

- The Road Ahead

- A Final Word

- FAQ

The 10 Biggest AI Challenges

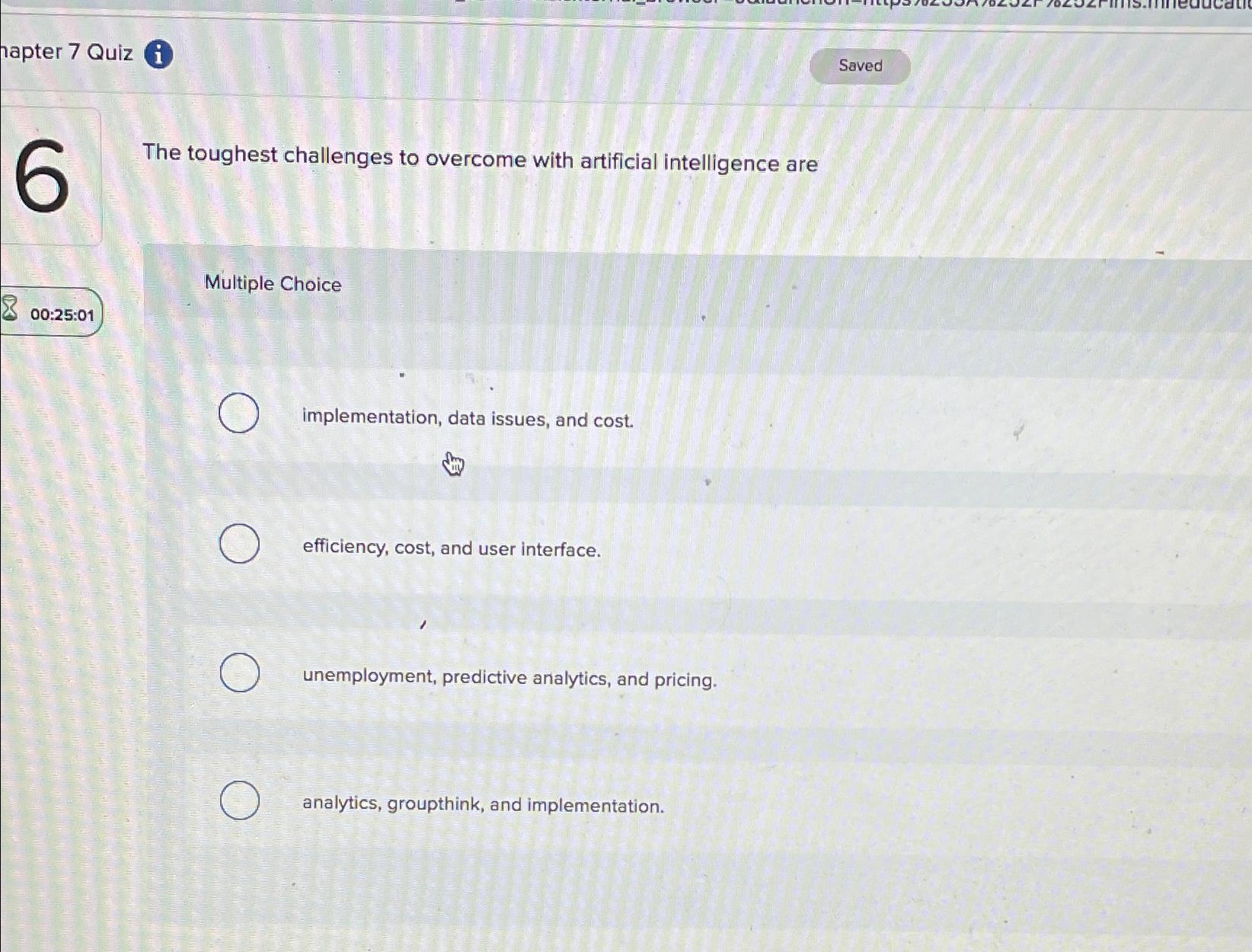

Artificial intelligence (AI) is moving fast. Every week brings bold wins, new tools, and big dreams. But behind the headlines sits a simple truth: the toughest challenges to overcome with artificial intelligence are also the most important ones to solve. They touch our data, our money, our safety, our laws, and how we work. They decide who gets value from AI, and who gets left behind.

This week, we go deep into those challenges. We look at the toughest roadblocks that teams face right now. We explain them in clear, simple words. And we include trusted sources so you can dig in more.

What are the biggest hurdles? They cross many areas: technical, ethical, financial, regulatory, and organizational. They include data quality and bias, explainability, privacy and security, massive hardware costs, complex system integration, scaling, a talent gap, fast-changing rules, and the rapid pace of change itself (source: overview informed by multiple analyses: Onclusive; ConvergeTP; Deloitte; Ironhack).

1) Data Quality, Availability, and Bias

AI runs on data. If the data is poor, the results are poor. If the data is biased, the outcomes can be unfair. That can be harmful. It can also be dangerous when used in areas like health, hiring, or finance. AI models need a lot of high-quality, diverse data to work well. When data is incomplete or one-sided, the model can learn the wrong patterns. It can then repeat those mistakes at scale. This can also increase real-world risks for people and for organizations (source).

Bias is a serious issue. It can amplify existing discrimination. It can also create new forms of unfairness. Good data governance matters. Teams need clear rules and checks to keep data fair, diverse, and fit for use. They need ways to test and fix bias early, and then monitor it over time (source). Learn more.
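
One simple check of this kind compares outcome rates across groups. The sketch below is illustrative only: the group labels, the sample decisions, and the 0.8 review threshold (loosely modeled on the "four-fifths" rule of thumb used in US employment contexts) are assumptions for the example, not figures from the sources cited here.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes for each group.

    records: iterable of (group, outcome) pairs, where outcome is 0 or 1.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, outcome in records:
        totals[group] += 1
        positives[group] += outcome
    return {group: positives[group] / totals[group] for group in totals}


def disparate_impact_ratio(rates):
    """Lowest selection rate divided by the highest (1.0 means parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so rates are equal
    return min(rates.values()) / highest


# Hypothetical model decisions: (group, 1 = approved / 0 = rejected).
decisions = [
    ("A", 1), ("A", 1), ("A", 1), ("A", 0),
    ("B", 1), ("B", 0), ("B", 0), ("B", 0),
]

rates = selection_rates(decisions)
ratio = disparate_impact_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed cut-off for flagging a gap for human review
needs_review = ratio < REVIEW_THRESHOLD
```

A check like this is only a starting point. It flags a gap in outcomes; it does not say why the gap exists or whether it is justified, so a flagged result should lead to a closer look at the data and the model.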

2) Ethical and Privacy Concerns

AI does not just predict. It can affect real people in real ways. This raises big ethical questions. How are decisions made? Who is harmed if a model is wrong? Who is accountable if a model causes harm? These questions grow louder as AI touches schools, hospitals, banks, and public services (source).

Privacy is also a central concern. Laws such as GDPR in Europe and CCPA in California set strict limits on how personal data can be used. Companies must secure data. They must anonymize it when needed. They must keep it safe from leaks and misuse. Meeting these rules is hard work. It takes careful design, strong process, and good tools. But trust depends on it (source; source). Learn more.
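
As one small illustration of privacy-minded design, the sketch below replaces a direct identifier with a keyed hash and drops a field that is not needed. The field names, the key, and the helper names are hypothetical. Note that keyed hashing is pseudonymization rather than full anonymization: anyone holding the key can re-link records, so the output may still count as personal data.

```python
import hashlib
import hmac


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed SHA-256 digest (HMAC)."""
    return hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def scrub_record(record, secret_key, id_fields=("email",), drop_fields=("name",)):
    """Return a copy of record with identifiers hashed and unneeded fields removed."""
    cleaned = {}
    for key, value in record.items():
        if key in drop_fields:
            continue  # data minimization: do not keep what is not needed
        if key in id_fields:
            cleaned[key] = pseudonymize(str(value), secret_key)
        else:
            cleaned[key] = value
    return cleaned


# Hypothetical record and key; a real key would come from a secrets manager.
demo_key = b"demo-key-not-for-production"
raw = {"name": "Ada Example", "email": "ada@example.com", "age": 36}
safe = scrub_record(raw, demo_key)
```

The same input and key always give the same digest, so records can still be joined for analysis without exposing the original identifier.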

3) Explainability and Transparency: The “Black Box” Problem

Many of the most powerful AI models are very complex. They are made of layers upon layers of math. That makes them hard to explain. Stakeholders want answers, in plain words. Why did the model deny a loan? Why did it flag a test result? Why did it pick one option over another? When people cannot understand a model’s decision, they may not trust it. In fields like finance and healthcare, a lack of clarity can block deployment. It can also raise regulatory risk (source).

Explainability and transparency are key for trust. They are also key for safety. Teams need ways to reveal how models behave, and why. This is hard. But without it, adoption stalls. End users, regulators, and leaders will say no to a black box (source).
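
One model-agnostic way to reveal which inputs a model relies on is permutation importance: shuffle one feature at a time and measure how much accuracy drops. The sketch below is a minimal version using only the standard library. The toy model and data are invented for illustration, and real projects would normally use an established library rather than this hand-rolled loop.

```python
import random


def accuracy(model, rows, labels):
    """Fraction of rows where the model's prediction matches the label."""
    return sum(model(row) == label for row, label in zip(rows, labels)) / len(rows)


def permutation_importance(model, rows, labels, n_features, seed=0):
    """Accuracy drop per feature after shuffling that feature's column."""
    rng = random.Random(seed)
    baseline = accuracy(model, rows, labels)
    drops = []
    for j in range(n_features):
        column = [row[j] for row in rows]
        rng.shuffle(column)
        # Rebuild each row with only feature j replaced by a shuffled value.
        shuffled = [row[:j] + (value,) + row[j + 1:] for row, value in zip(rows, column)]
        drops.append(baseline - accuracy(model, shuffled, labels))
    return drops


# Hypothetical "black box": it only looks at feature 0 and ignores feature 1.
def toy_model(row):
    return int(row[0] > 0.5)


rows = [(0.1, 5), (0.9, 3), (0.4, 8), (0.7, 1), (0.2, 9), (0.8, 2)]
labels = [toy_model(row) for row in rows]

importances = permutation_importance(toy_model, rows, labels, n_features=2)
```

Here the second feature gets an importance of exactly zero, because the toy model never reads it. A larger drop for a feature suggests the model leans on it more, which gives reviewers a place to start asking questions.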

4) Security and Adversarial Attacks

AI systems sit inside long data pipelines. They take in data, transform it, train, tune, and then serve outputs. Attackers know this. They search for weak spots in the chain. They try to poison training data. They try to trick models with tiny, targeted inputs called adversarial examples. They try to steal data or model weights. Any of these can skew results, break trust, or cause harm (source; source).
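
To make the idea of an adversarial example concrete, the sketch below nudges each input feature by a small amount in the direction that lowers a linear classifier's score. This follows the spirit of the fast gradient sign method, simplified to a linear model where the gradient with respect to the input is just the weight vector. The weights, input, and epsilon are made up for the example.

```python
def score(weights, bias, x):
    """Linear score: positive means class 1, otherwise class 0."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def predict(weights, bias, x):
    return 1 if score(weights, bias, x) > 0 else 0


def sign(value):
    """Return -1, 0, or 1 depending on the sign of value."""
    return (value > 0) - (value < 0)


def sign_gradient_perturbation(weights, x, epsilon):
    """Shift every feature by at most epsilon so the linear score decreases."""
    return [xi - epsilon * sign(w) for w, xi in zip(weights, x)]


# Hypothetical model and input.
weights = [2.0, -3.0, 1.0]
bias = 0.0
x = [0.3, 0.1, 0.2]

x_adv = sign_gradient_perturbation(weights, x, epsilon=0.1)
original_class = predict(weights, bias, x)
adversarial_class = predict(weights, bias, x_adv)
```

No feature moves by more than 0.1, yet the predicted class flips from 1 to 0. Testing a model against perturbations like this before release is one way to find such weak spots early.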

The stakes are high. If attackers can shape the data or the model, they can shape the outcome. This can lead to wrong diagnoses, bad credit decisions, safety failures, or misinformation. Security must cover the full AI life cycle, not just the app layer (source; source). Learn more.

5) Massive Capital and Compute Requirements

Training cutting-edge models takes huge compute power. It takes large memory, fast networking, and costly hardware. It also takes a lot of data and strong R&D teams. This costs a lot of money. Few groups can afford it. This can concentrate power in the hands of a small set of players. It can widen gaps between regions, sectors, and companies (source).

For many, the costs are not just up front. Ongoing costs add up, from fine-tuning to inference at scale. Cloud bills rise as use grows. Teams must plan for both capital expense and operating expense. This shapes how fast they can move, and where they invest (source).
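
A rough cost model can make this planning concrete. The sketch below adds a fixed monthly cost to a usage-based inference cost. All prices and traffic levels are placeholder assumptions, not real vendor rates.

```python
def monthly_inference_cost(requests_per_day, cost_per_1k_requests,
                           fixed_monthly=0.0, days=30):
    """Estimate a monthly bill: fixed costs plus usage-based inference costs."""
    variable = requests_per_day * days / 1000 * cost_per_1k_requests
    return fixed_monthly + variable


# Hypothetical growth stages, in requests per day.
scenarios = {"pilot": 2_000, "launch": 200_000, "scale": 2_000_000}

costs = {
    name: monthly_inference_cost(
        requests_per_day,
        cost_per_1k_requests=0.50,  # assumed price per 1,000 requests
        fixed_monthly=1_500,        # assumed baseline for hosting and monitoring
    )
    for name, requests_per_day in scenarios.items()
}
```

With these assumed inputs, the fixed cost dominates during the pilot, while usage dominates at scale. That shift is why the same project can look cheap early and expensive later.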

6) Integration with Legacy Systems

Many firms run on old systems. These systems were not built for AI. They may not support the data flows or the compute loads that AI needs. They might have strict rules for change. They may lack APIs, or have tight security zones that make integration slow and costly. This is common in big companies and in sectors like manufacturing and government (source).

When integration is hard, projects stall. Teams end up building one-off fixes. These fixes can add risk and cost. Over time, they make the whole stack harder to manage. Good integration plans, modern data platforms, and clear governance are key (source).

7) Talent Gap and Skills Shortage

AI needs many skills. It needs research. It needs data engineering. It needs machine learning operations. It needs product and design. It also needs experts in safety and responsible use. There is a shortage of these skills. Hiring and keeping top people is hard. This slows down projects. It also raises costs. For many, the talent gap is the biggest barrier to entry (source).

The gap is not only technical. Leaders and teams need to learn how to pick good AI use cases. They need to measure value in clear ways. They must also manage risk and change. All of this takes skill and practice (source).

8) Scalability and Performance

Making AI work in a lab is one thing. Making it work for millions of users in real time is another. Scale adds stress to hardware, networks, and software. Latency matters. Uptime matters. Cost per request matters. Teams must tune models, code, and data paths for speed, cost, and reliability. This is a hard engineering task, and it never stops as demand grows (source).

As systems scale, small problems can become big ones. A tiny bug in one service can ripple through the stack. A delay in one region can slow the whole product. Strong observability and performance work are vital (source).
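
One basic observability habit is to track tail latency, not just the average. The sketch below computes nearest-rank percentiles over a small set of invented response times; the sample values are assumptions chosen to show one slow outlier.

```python
import math


def percentile(values, p):
    """Nearest-rank percentile for 0 < p <= 100."""
    if not values:
        raise ValueError("values must not be empty")
    ordered = sorted(values)
    rank = math.ceil(p * len(ordered) / 100)
    return ordered[max(rank, 1) - 1]


# Hypothetical response times in milliseconds, with one slow request.
latencies_ms = [12, 15, 11, 250, 14, 13, 16, 12, 18, 13]

mean_ms = sum(latencies_ms) / len(latencies_ms)
p50_ms = percentile(latencies_ms, 50)
p95_ms = percentile(latencies_ms, 95)
```

In this sample the median is 13 ms and the mean is 37.4 ms, but the 95th percentile is 250 ms. The typical request looks fine while the slowest requests are far worse, which is the kind of gap that grows into a visible problem at scale.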

9) Regulatory and Compliance Risk

AI now sits under many rules, and more rules are coming. Laws differ by country and by sector. There are rules about data use, transparency, safety, and who is responsible for outcomes. There are rules for audits and reporting. The map is complex. Getting it wrong can lead to legal risk and brand damage (source; source).

Firms must track new rules and adapt fast. They need clear documentation, testing, and controls. They need to show how models are built, trained, tested, and monitored. This takes time and care (source).
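
Documentation of this kind is easier to keep current when it is generated alongside the model. The sketch below shows one possible shape for an audit record. The class name, fields, and sample values are hypothetical and not drawn from any specific regulation or standard.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field


@dataclass
class ModelAuditRecord:
    """Minimal, illustrative record of how a model version was produced."""
    model_name: str
    version: str
    training_data_sha256: str  # fingerprint that ties the model to its data
    eval_metrics: dict = field(default_factory=dict)
    approved_by: str = ""


def fingerprint(data: bytes) -> str:
    """SHA-256 digest used to identify the exact training data snapshot."""
    return hashlib.sha256(data).hexdigest()


def to_json(record: ModelAuditRecord) -> str:
    """Serialize with sorted keys so successive records diff cleanly."""
    return json.dumps(asdict(record), sort_keys=True, indent=2)


# Hypothetical example values.
record = ModelAuditRecord(
    model_name="credit-risk-demo",
    version="1.2.0",
    training_data_sha256=fingerprint(b"id,income,label\n1,52000,0\n"),
    eval_metrics={"accuracy": 0.91},
    approved_by="model-risk-committee",
)
audit_json = to_json(record)
```

Storing a record like this with every release gives auditors a trail from a deployed model back to its data, its test results, and the people who signed off.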

10) Rapid Technological Change

AI is changing fast. New methods arrive often. New tools replace old ones. Standards shift. This can break old playbooks. It makes it hard for teams to keep skills fresh. It also makes long-term planning tricky. Leaders must build for change. They must budget for ongoing learning and upgrades (source: overview and synthesis across current analyses: Onclusive; ConvergeTP; Deloitte; Ironhack).

Additional Sector-Specific and Emerging Challenges

Digital Pathology and Domain Specialization

In medicine, AI can help read images, find patterns, and support doctors. But medical AI has its own challenges. Data quality must be very high. Labels on images must be reliable. Many images must be annotated by experts. This takes time and money. It also needs strong compute to train on large image sets. These hurdles make progress slow and costly in areas like digital pathology (source). Learn more.

Strategic Uncertainty

Leaders face hard choices. There are many possible AI use cases. Which ones bring real value? Which ones carry too much risk? How do we weigh business results and social impact? Without clear strategy, teams can chase hype. They can spread effort too thin. They can miss the wins that matter most (source).

A Quick Reference: Key AI Challenges Summarized

- Data quality and bias: Bad or biased data leads to wrong and unfair results (source).

- Ethical and privacy concerns: Use must be fair and compliant, with strong protection of personal info under laws like GDPR and CCPA (source; source).

- Explainability: Models must be understandable to humans, especially in sensitive fields (source).

- Security: Defend data, models, and pipelines from attacks and manipulation (source; source).

- Infrastructure costs: Building and running modern AI can be very expensive (source).

- System integration: Legacy systems often do not fit AI needs, making projects slow and complex (source).

- Talent gap: There are not enough skilled people to meet demand (source).

- Scalability: It is hard to keep AI fast, reliable, and cost-effective at global scale (source).

- Regulatory compliance: Rules are evolving and complex across regions and sectors (source; source).

- Rapid technological change: The field moves fast, pushing constant retraining and upgrades (source; source; source; source).

How These Challenges Connect

These challenges do not sit alone. They blend together:

- If data is biased, you face ethics risks and compliance risks.

- If models are not explainable, regulators may say no, and users may not trust them.

- If security is weak, one breach can ruin trust and trigger legal trouble.

- If costs are high and skills are scarce, you cannot scale even good ideas.

- If legacy systems cannot support AI, value gets stuck in pilots.

- If rules change fast, and tech changes faster, long projects can fall behind before they launch.

Together, these issues slow down safe, effective adoption. Getting past them takes strong teamwork. It takes leaders, engineers, data experts, ethicists, lawyers, and operators working as one. It also takes steady investment and a mindset of learning and change (source: high-level synthesis across these analyses: Onclusive; ConvergeTP; Deloitte; Ironhack).

What It Takes to Move Forward

While each challenge is hard, there are practical steps that help. These steps align with the issues and the sources above:

- Build strong data governance: Set rules for how data is collected, cleaned, labeled, and used. Test for bias. Fix issues early. Keep testing over time (source).

- Design for privacy: Use methods that protect personal info. Limit access. Anonymize where possible. Map to GDPR and CCPA requirements. Document decisions (source; source).

- Aim for explainability: Choose models and tools that support transparency. Provide clear, human-friendly reasons for decisions in high-stakes contexts (source).

- Secure the whole pipeline: Protect data sources, storage, training flows, model artifacts, and endpoints. Test for adversarial attacks. Plan for incident response (source; source).

- Right-size infrastructure: Model the true costs of training and inference. Consider where to use cloud, where to use on-prem, and when to optimize models to cut cost and latency (source).

- Modernize integration: Invest in data platforms, APIs, and event-driven systems that can support AI. Plan for change management across functions (source).

- Close the talent gap: Upskill teams. Build strong hiring programs. Partner with schools and communities. Train leaders on AI value and risk (source).

- Design for scale: Build observability and performance engineering in from day one. Use caching, batching, model optimization, and smart routing to keep costs and latency in check (source).

- Prepare for regulation: Track new rules. Build documentation and auditing as a core feature, not an afterthought. Work with legal and compliance teams early (source; source).

- Embrace ongoing change: Plan for learning, retraining, and upgrades. Keep your stack flexible. Expect to pivot as tech and standards evolve (source; source; source; source).
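
The caching and batching ideas in the "Design for scale" step above can be sketched in a few lines. The example removes duplicate requests (a simple form of caching) and groups the rest into batches, so the model is called fewer times. The `run_model_batch` function is a stand-in for a real model call, and the batch size is an arbitrary assumption.

```python
MODEL_CALLS = {"count": 0}


def run_model_batch(prompts):
    """Stand-in for a real batched model call (hypothetical)."""
    MODEL_CALLS["count"] += 1
    return [prompt.upper() for prompt in prompts]


def batched(items, batch_size):
    """Yield consecutive slices of items, each at most batch_size long."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def answer_all(prompts, batch_size=4):
    """Answer every prompt while calling the model as few times as possible."""
    unique = list(dict.fromkeys(prompts))  # drop repeats, keep first-seen order
    results = {}
    for chunk in batched(unique, batch_size):
        for prompt, output in zip(chunk, run_model_batch(chunk)):
            results[prompt] = output
    return [results[prompt] for prompt in prompts]


requests = ["a", "b", "a", "c", "b", "d", "e"]
answers = answer_all(requests, batch_size=4)
```

Seven requests contain five unique prompts, which fit into two batches, so the stand-in model runs twice instead of seven times. Real systems add time limits and cache expiry on top of this, but the cost logic is the same.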

A Closer Look: Stories Behind the Stats

- Think of an AI model trained on skewed data. Maybe the data had fewer examples from one group. The model then learns to overlook that group. In hiring or lending, that leads to unfair results. With better data checks and diverse sets, this risk can be reduced (source).

- Imagine a hospital using AI to flag scans. If the model is not explainable, doctors may not trust its alerts. In such cases, explainability tools that show important regions on an image can help build trust and improve care (source). Learn more.

- Picture an attacker who tweaks pixels in a way humans cannot see. A model might then misread an image. Without adversarial testing and robust security, this trick can slip through. Good security covers data checks, training process, and model guards at runtime (source; source). Learn more.

- Consider a large company with mainframes and old ERPs. An AI add-on might not fit. Data may be locked in old formats. Integrations need careful work to avoid errors that cascade. This slows timelines but is a common and solvable issue with modern data platforms and APIs (source).

Sector Spotlight: Healthcare’s “Hard Mode”

Healthcare shows how tough and how vital these issues are. Data must be accurate, secure, and private. Models must be explainable. Regulations are strict. Workflows are complex. In digital pathology, in particular, image quality, labeling quality, and the need for heavy compute make the task even harder. But the potential upside is huge: faster reads, earlier detection, and better outcomes when done right (source). Learn more.

Why This Moment Feels So Big

We stand at a tipping point. The tools are stronger than ever. The interest from leaders and users keeps rising. But bigger ambition brings bigger responsibility. The path to real, safe, and fair impact runs straight through the hard stuff described above. The winners will be the ones who take these challenges seriously, plan well, and keep learning as the field evolves (source: high-level synthesis across analyses: Onclusive; ConvergeTP; Deloitte; Ironhack).

Checklist for Leaders Getting Started

If you lead an AI effort, here is a simple starter list aligned to the key challenges and sources above:

- Set a clear AI strategy with focused use cases and success metrics (source).

- Build a data charter that covers quality, diversity, privacy, and governance (source).

- Choose models and tools that support explainability where it matters most (source).

- Map your security plan to the full AI pipeline, not just the app surface (source; source).

- Model your cost curve for training and inference at the scales you expect (source).

- Prepare your integration path from day one, with APIs and data platforms in scope (source).

- Launch an upskilling plan for both builders and business leaders (source).

- Design for performance and reliability as core product features (source).

- Build compliance and auditability into your daily workflow (source; source).

- Expect change. Budget time and money for regular updates, retraining, and new methods (source; source; source; source).

The Road Ahead

AI is a set of tools. But it is also a set of choices. We choose how to collect data. We choose how to build and test models. We choose how to explain results. We choose how to protect people and meet the law. And we choose where to invest so that benefits are shared, not hoarded.

The toughest challenges to overcome with artificial intelligence are not side quests. They are the main story. They decide if AI earns trust. They decide if AI scales. They decide if AI helps people in ways that are fair and lasting.

This is why data quality and bias sit at the heart of the work (source). This is why ethics and privacy must be woven into the design from day one (source; source). This is why explainability, security, and compliance cannot be afterthoughts (source; source; source). And this is why investment in people, integration, and scale will separate pilots from products (source; source).

A Final Word

We live in a thrilling time for AI. The speed can feel dizzying. The promise can feel huge. But the real story is not just about new models or bigger datasets. It is about doing the hard work that makes AI safe, fair, and useful in the real world.

If we face the challenges head-on, from data, ethics, explainability, and security to cost, integration, talent, scale, regulation, and rapid change, we can unlock value for everyone. Doing so takes a team mindset across roles and fields, plus steady investment and a plan to adapt as the world changes (source: synthesis across leading analyses: Onclusive; ConvergeTP; Deloitte; Ironhack).

This is how we move from promise to progress. This is how we make AI work for people. And this is why the toughest challenges to overcome with artificial intelligence are the ones worth tackling first.

FAQ

What is the biggest challenge in AI adoption?

The talent gap is often cited as the biggest barrier, but data quality, bias, and ethical concerns are equally critical to address for safe and effective AI.

How can companies address bias in AI?

By implementing strong data governance, using diverse datasets, and continuously testing models for bias early and over time (source).

Why is explainability important for AI models?

Explainability builds trust among users and regulators, especially in sectors like healthcare and finance where decisions have high stakes (source).

What makes AI security complex?

AI systems involve long data pipelines vulnerable to poisoning, adversarial attacks, and data theft, so security must cover all stages of the AI lifecycle (source; source).

How do regulatory challenges impact AI deployment?

Varying and evolving laws require comprehensive compliance efforts including documentation, testing, and audits to avoid risks and penalties (source).

“`
